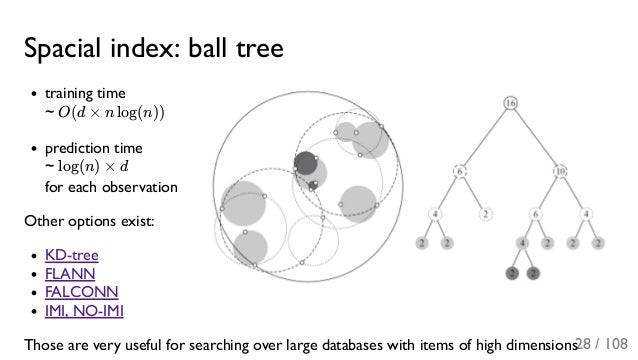

Ball Tree in KNN

The ball tree is a technique that improves on brute-force k-nearest-neighbor (KNN) search in terms of speed. Standard Euclidean distance is the most common choice of metric. One implementation pairs a brute-force algorithm written with NumPy with a ball tree written in Cython, and also provides a set of distance metrics implemented in Cython.
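As a baseline for comparison, a brute-force search with NumPy can be sketched as follows. This is a minimal illustration that assumes Euclidean distance; the function name `brute_force_knn` is hypothetical and is not taken from any particular library.

```python
import numpy as np

def brute_force_knn(X, query, k):
    """Return indices and distances of the k rows of X nearest to query."""
    # Euclidean distance from the query to every point in the data set
    dists = np.sqrt(((X - query) ** 2).sum(axis=1))
    # argpartition selects the k smallest distances without a full sort
    idx = np.argpartition(dists, k - 1)[:k]
    # Order the k candidates from nearest to farthest
    idx = idx[np.argsort(dists[idx])]
    return idx, dists[idx]

X = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 2.0], [3.0, 3.0]])
idx, dists = brute_force_knn(X, np.array([0.9, 0.1]), k=2)
print(idx, dists)
```

Every query touches all `n_samples` points, which is the cost a ball tree tries to avoid.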

The leaves of the tree contain the relevant information, and internal nodes are used to guide an efficient search through the leaves. In scikit-learn, the ball tree is available as `sklearn.neighbors.BallTree`. `n_samples` is the number of points in the data set and `n_features` is the dimension of the parameter space.

This is a Python/Cython implementation of KNN algorithms. A k-d tree can be built in O(kn log n) time if the n points are presorted in each of the k dimensions, using an O(n log n) sort such as heapsort or mergesort, before the tree is built. Common indexing choices include k-d trees for low-dimensional data, inverted indices for sparse data, and fingerprinting. Parameters: `X`, array-like of shape `(n_samples, n_features)`.

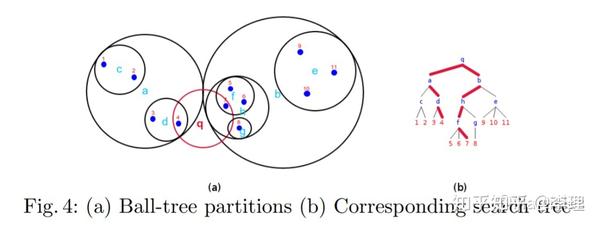

Removing a point from a balanced k-d tree takes O(log n) time. The scikit-learn class signature is `sklearn.neighbors.BallTree(X, leaf_size=40, metric='minkowski', **kwargs)`. A ball tree is a binary tree constructed using a top-down approach. An important application of ball trees is expediting nearest neighbor search queries, in which the objective is to find the k points in the tree that are closest to a given test point by some distance metric.
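A short usage sketch of the class named above, assuming scikit-learn and NumPy are installed. The random data and the choice of `k=3` are illustrative only.

```python
import numpy as np
from sklearn.neighbors import BallTree

rng = np.random.RandomState(0)
X = rng.random_sample((100, 3))  # n_samples=100, n_features=3

# Build the tree once; leaf_size and metric are the documented defaults
tree = BallTree(X, leaf_size=40, metric="minkowski")

# Query the 3 nearest neighbors of the first point (itself included)
dist, ind = tree.query(X[:1], k=3)
print(ind)   # indices of the neighbors, nearest first
print(dist)  # corresponding distances
```

Because the query point is part of the data set, its own index comes back first with distance zero.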

The concept of the ball tree: scikit-learn describes its `BallTree` class as a structure for fast generalized N-point problems. For comparison, inserting a new point into a balanced k-d tree takes O(log n) time.
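To make the concept concrete, here is a small pure-Python sketch of a ball tree built top-down, with a k-nearest-neighbor query that prunes whole balls. It is an illustration under simple assumptions (median split along the widest dimension, Euclidean distance), not scikit-learn's implementation; the names `Ball`, `dist`, and `knn` are hypothetical.

```python
import heapq
import math

def dist(a, b):
    """Euclidean distance between two points."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

class Ball:
    """A node covering `points` with a ball (center, radius)."""
    def __init__(self, points, leaf_size=2):
        dim = len(points[0])
        self.center = [sum(p[d] for p in points) / len(points) for d in range(dim)]
        self.radius = max(dist(self.center, p) for p in points)
        self.points = None
        self.left = self.right = None
        if len(points) <= leaf_size:
            self.points = points  # leaf: store the points themselves
            return
        # Top-down split: median cut along the dimension of greatest spread
        spread = [max(p[d] for p in points) - min(p[d] for p in points)
                  for d in range(dim)]
        d = spread.index(max(spread))
        ordered = sorted(points, key=lambda p: p[d])
        mid = len(ordered) // 2
        self.left = Ball(ordered[:mid], leaf_size)
        self.right = Ball(ordered[mid:], leaf_size)

def knn(node, query, k, heap=None):
    """Collect the k nearest points in a max-heap of (-distance, point)."""
    if heap is None:
        heap = []
    # No point inside the ball can be closer than dist(query, center) - radius
    if len(heap) == k and dist(query, node.center) - node.radius >= -heap[0][0]:
        return heap  # prune this whole subtree
    if node.points is not None:
        for p in node.points:
            d = dist(query, p)
            if len(heap) < k:
                heapq.heappush(heap, (-d, p))
            elif d < -heap[0][0]:
                heapq.heapreplace(heap, (-d, p))
        return heap
    # Visit the child whose center is closer first, to tighten the bound early
    for child in sorted((node.left, node.right),
                        key=lambda c: dist(query, c.center)):
        knn(child, query, k, heap)
    return heap

points = [(0.0, 0.0), (1.0, 0.0), (0.0, 2.0), (3.0, 3.0), (2.5, 2.0), (5.0, 1.0)]
tree = Ball(points, leaf_size=2)
result = sorted((-neg_d, p) for neg_d, p in knn(tree, (2.9, 2.8), k=2))
nearest = [p for _, p in result]
print(nearest)
```

The pruning test relies on the triangle inequality: if the nearest possible point of a ball is already farther than the current k-th best candidate, the ball cannot contribute.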

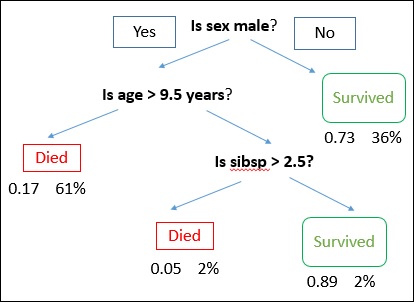

No exact algorithm is known that answers nearest neighbor queries quickly in every case, but approximate methods exist. Neighbors-based methods are known as non-generalizing machine learning methods, since they simply remember all of their training data (possibly transformed into a fast indexing structure such as a ball tree or k-d tree).
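The "remember the training data in an index" idea can be seen in scikit-learn's `KNeighborsClassifier`, which accepts `algorithm="ball_tree"`. The sketch below assumes scikit-learn is installed and uses a tiny made-up data set.

```python
import numpy as np
from sklearn.neighbors import KNeighborsClassifier

# Two small, well-separated clusters with labels 0 and 1
X_train = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                    [3.0, 3.0], [3.1, 2.9], [2.9, 3.2]])
y_train = np.array([0, 0, 0, 1, 1, 1])

# fit() stores the training points in a ball tree; no model parameters are learned
clf = KNeighborsClassifier(n_neighbors=3, algorithm="ball_tree")
clf.fit(X_train, y_train)

# Each prediction is a majority vote among the 3 nearest stored points
pred = clf.predict(np.array([[0.1, 0.1], [3.0, 3.1]]))
print(pred)
```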